This is Part 6 of our 6-part Deep Dive series on neuromorphic computing—the brain-inspired processors achieving 1,000× efficiency improvements over GPUs at the edge.

[🧠] Sapien Fusion Deep Dive Series | February 4, 2026 | Reading time: 5 minutes

Why Neuromorphic’s Biggest Challenge Isn’t Hardware

Intel’s Loihi 3 delivers 1,000× the efficiency of previous generations. IBM’s NorthPole achieves 72.7× better energy efficiency than GPUs. ANYmal operates 72 hours on a single charge. Mercedes-Benz targets 0.1ms perception latency. The hardware capabilities are extraordinary and validated.

The software ecosystem lags years behind.

This gap represents neuromorphic computing’s most significant barrier to mainstream adoption. Organizations can purchase processors delivering transformative efficiency, but converting existing AI models and applications requires navigating fragmented tooling, scarce expertise, and immature development workflows. The hardware revolution waits on software evolution.

The Conversion Challenge

Most AI applications run on models trained using PyTorch, TensorFlow, or similar frameworks designed for conventional processors. These models operate on continuous activation values, execute through synchronous forward passes, and assume frame-based temporal structure. Converting them to neuromorphic architectures requires fundamental algorithmic transformation.

Spiking neural networks—the computational model underlying processors like Loihi 3—communicate through discrete temporal events rather than continuous values. Neurons fire spikes when activation thresholds are exceeded, information is encoded in spike timing and frequency, and processing occurs asynchronously rather than in lockstep. This temporal dimension adds complexity absent in conventional neural networks.
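To make the threshold-and-fire behavior concrete, here is a minimal sketch of a discrete-time leaky integrate-and-fire neuron in plain Python. It is an illustration only: the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions chosen for readability, not parameters of Loihi or any vendor toolchain.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Returns the list of time steps at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    membrane = 0.0
    spike_times = []
    for t, current in enumerate(input_current):
        # Leak a fraction of the stored potential, then integrate the input.
        membrane = leak * membrane + current
        if membrane >= threshold:
            spike_times.append(t)
            membrane = reset  # hard reset after firing
    return spike_times


# A weak constant input never reaches threshold; a stronger one fires regularly.
weak = simulate_lif([0.05] * 50)
strong = simulate_lif([0.3] * 50)
print("weak input spikes:  ", weak)
print("strong input spikes:", strong)
```

The weak input produces no output at all, while the stronger input is reported only as a sequence of spike times. That is the sense in which information lives in spike timing and frequency rather than in a continuous activation value.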

The conversion process demands redefining activation functions to produce spike trains that encode equivalent information, redesigning network architectures to leverage temporal dynamics, adjusting training procedures to account for spiking neuron behavior, and validating that converted models maintain accuracy within acceptable bounds.
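One common way to approach the first and last of these steps is rate coding: a continuous activation is replaced by a spike train whose firing rate approximates it, and the approximation error is then measured. The sketch below assumes activations already scaled to the range 0 to 1 and uses a simple integrate-and-fire unit with soft reset. The helper names (`rate_encode`, `decode_rate`, `max_conversion_error`) and the sample values are hypothetical, and real conversion tools handle far more than this.

```python
def relu(x):
    """Conventional continuous activation."""
    return max(0.0, x)


def rate_encode(activation, num_steps):
    """Turn an activation in [0, 1] into a 0/1 spike train.

    Uses an integrate-and-fire unit with soft reset: the activation is
    accumulated each step, and a spike subtracts the threshold (1.0).
    """
    membrane = 0.0
    spikes = []
    for _ in range(num_steps):
        membrane += activation
        if membrane >= 1.0:
            spikes.append(1)
            membrane -= 1.0
        else:
            spikes.append(0)
    return spikes


def decode_rate(spikes):
    """Recover the encoded value as the fraction of steps with a spike."""
    return sum(spikes) / len(spikes)


def max_conversion_error(pre_activations, num_steps):
    """Largest gap between ReLU outputs and their spike-rate estimates."""
    return max(
        abs(relu(x) - decode_rate(rate_encode(relu(x), num_steps)))
        for x in pre_activations
    )


samples = [-0.4, 0.1, 0.3, 0.55, 0.9]  # illustrative pre-activations
for steps in (4, 16, 64, 256):
    print(steps, round(max_conversion_error(samples, steps), 4))
```

In this toy setup the error shrinks as the number of time steps grows, but every extra step costs latency and energy. That trade-off is one reason conversion tends to need iterative tuning rather than a single automated pass.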

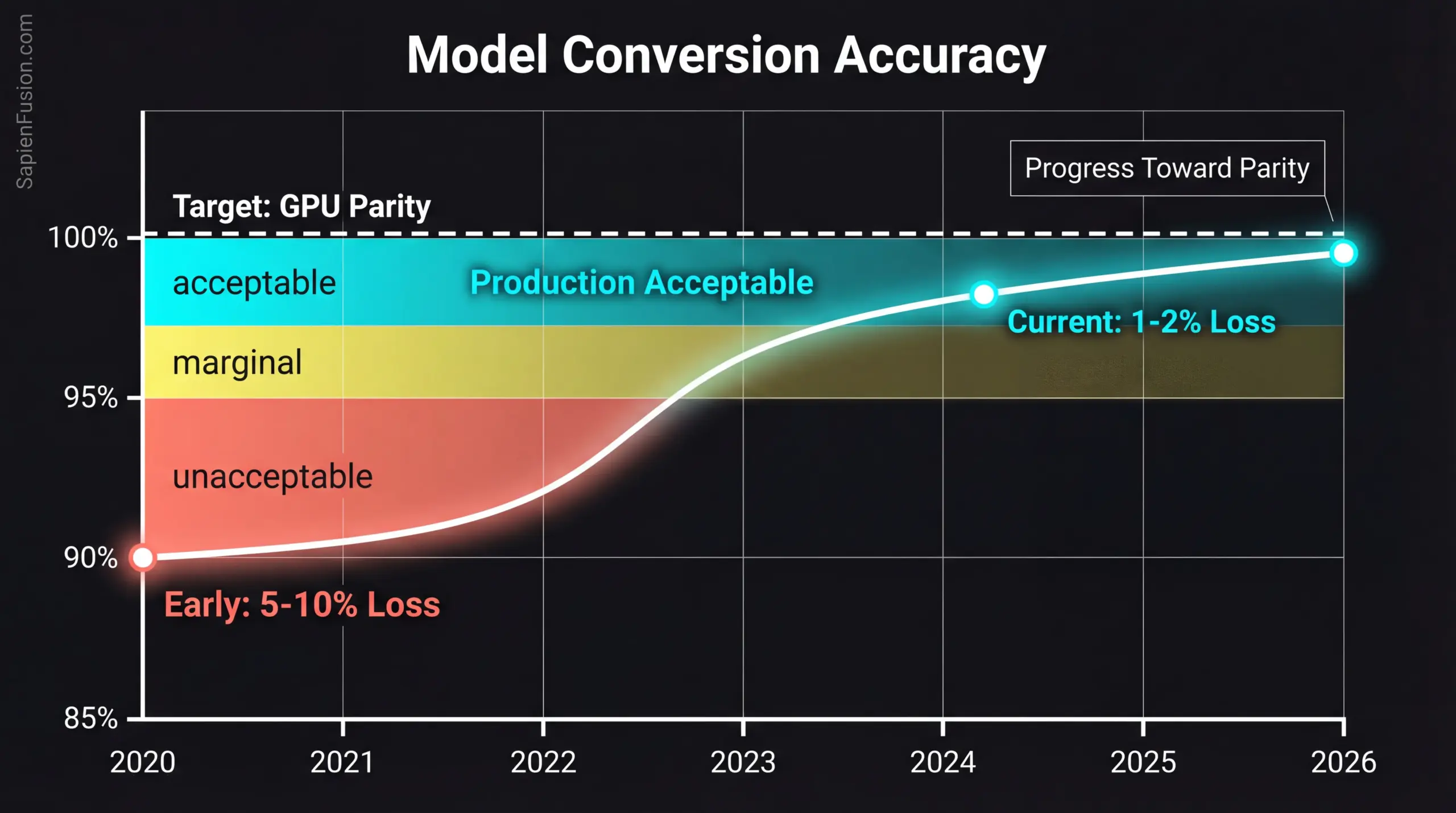

Early conversions suffered accuracy degradation of 5-10% relative to the original models, which is unacceptable for most applications. Recent algorithmic advances and improved tools have reduced degradation to 1-2%, but achieving this requires significant expertise and iterative refinement. The process remains more art than engineering.

The Tool Fragmentation

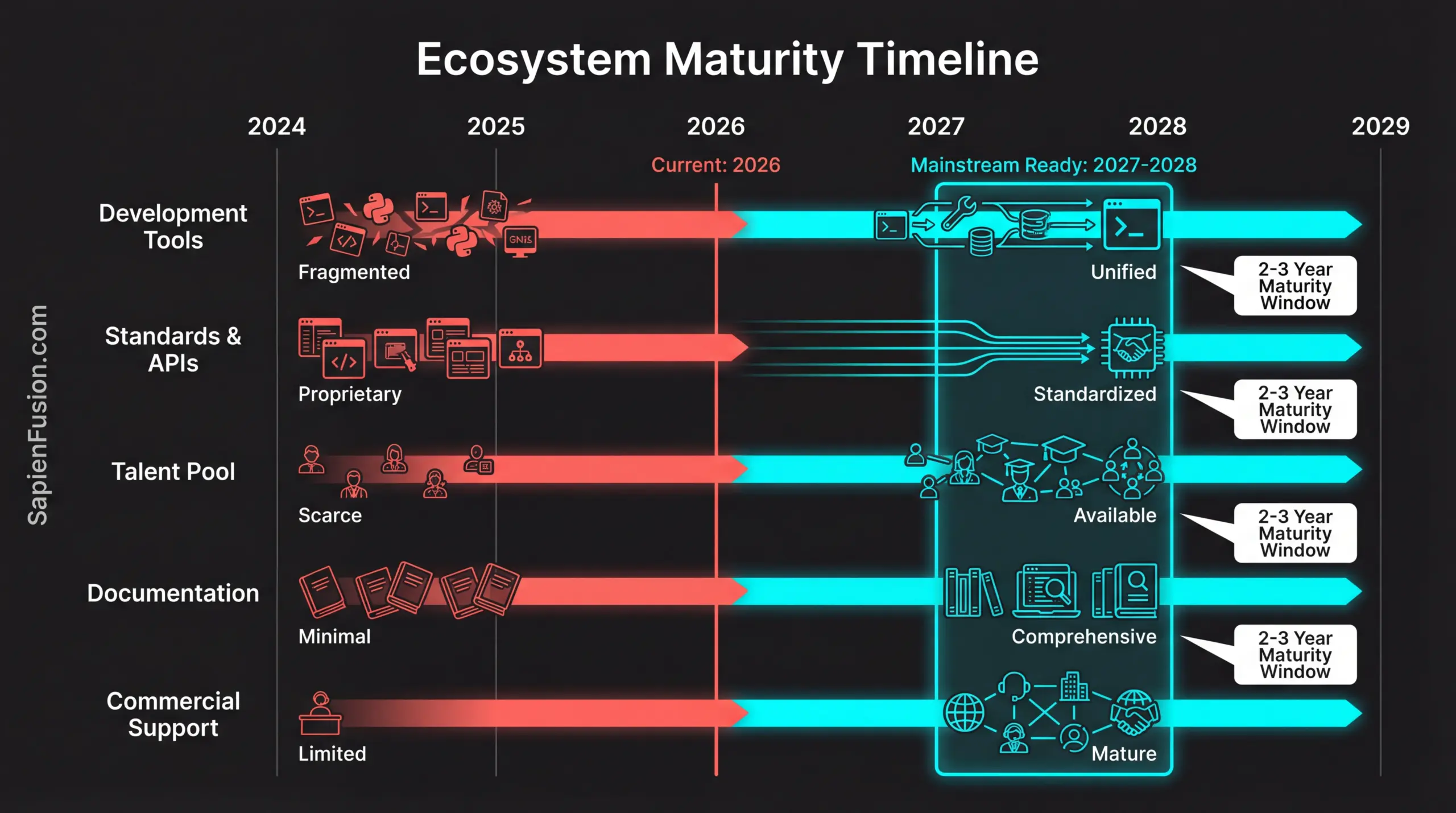

The neuromorphic ecosystem lacks the unified tooling that makes conventional AI development accessible. PyTorch and TensorFlow provide comprehensive frameworks handling model definition, training, optimization, deployment, and monitoring. Developers access extensive documentation, abundant tutorials, and massive community support.

Neuromorphic development involves navigating a fragmented landscape. Intel’s Lava framework supports Loihi processors but requires understanding neuromorphic-specific concepts. IBM’s tools for NorthPole focus on quantized model deployment rather than spiking networks. Academic frameworks like Norse, BindsNET, and Brian2 target research rather than production deployment.

No single framework provides an end-to-end workflow from model development through production deployment. Developers frequently combine multiple tools—training in conventional frameworks, converting with custom scripts, optimizing in hardware-specific environments, and deploying with vendor tools. This fragmentation increases development time and raises expertise requirements substantially.

The visualization and debugging tools lag particularly far behind. TensorBoard, Weights & Biases, and similar platforms enable inspecting conventional neural network behavior through activation visualizations, gradient monitoring, and performance profiling. Neuromorphic equivalents remain rudimentary, often requiring custom visualization code to understand temporal spike dynamics.
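As an illustration of the kind of custom visualization code this often means in practice, the sketch below renders recorded spike times as a plain-text raster, one row per neuron. The function name `render_raster` and the recorded data are made up for the example; they do not correspond to any vendor's debugging API.

```python
def render_raster(spike_times_by_neuron, num_steps):
    """Return a text raster: one row per neuron, '|' at spike times, '.' elsewhere."""
    rows = []
    for name, spike_times in spike_times_by_neuron.items():
        fired = set(spike_times)
        marks = "".join("|" if t in fired else "." for t in range(num_steps))
        rows.append(f"{name:>4} {marks}")
    return "\n".join(rows)


# Hypothetical recording: neuron name -> time steps at which it fired.
recorded = {"n0": [1, 4, 7], "n1": [0, 5], "n2": []}
print(render_raster(recorded, 10))
```

Even a crude view like this makes silent neurons and bursts visible at a glance, which is the kind of temporal inspection conventional activation dashboards were not designed for.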

The Expertise Gap

The AI talent market overflows with engineers experienced in PyTorch and TensorFlow. Recruiting machine learning engineers familiar with conventional frameworks poses minimal challenge. Finding developers with neuromorphic experience requires targeting a tiny talent pool.

Universities only recently began offering neuromorphic computing courses. Most computer science and engineering programs focus entirely on conventional architectures. The few programs incorporating neuromorphic content produce few graduates relative to industry demand.

This expertise scarcity leaves organizations adopting neuromorphic technology with two costly options. They can retrain existing staff, which takes months and reduces productivity during the transition, or they can recruit scarce specialists who command premium compensation and are the subject of bidding wars across a limited talent pool.

The retraining path demands significant investment. Engineers must understand spiking neuron dynamics and temporal coding schemes, learn event-driven programming paradigms, master hardware-specific optimization techniques, and develop intuition for temporal network behavior. This knowledge doesn’t transfer from conventional AI experience—it requires ground-up learning.

The Algorithmic Immaturity

Conventional deep learning benefits from decades of algorithm development. Researchers arrived at strong architectures like ResNet, EfficientNet, and Transformers through extensive experimentation. Techniques including batch normalization, attention mechanisms, and residual connections emerged from systematic investigation. The field established best practices and design patterns.

Neuromorphic computing lacks equivalent algorithmic maturity. Researchers still debate fundamental questions about optimal spiking network architectures, effective temporal coding schemes, and efficient training algorithms. The design space remains largely unexplored compared to conventional networks.

This immaturity manifests practically. Developers converting applications often achieve sub-optimal performance because best practices don’t exist yet. Research papers demonstrate impressive results on specific tasks but don’t generalize to broader applications. The trial-and-error required for development extends timelines unpredictably.

The academic research community actively addresses these gaps. Papers published in 2025 and early 2026 show significant advances in training algorithms, architecture search techniques, and deployment optimization. However, transitioning research insights to production-ready tools requires additional years.

The Standardization Void

The conventional AI ecosystem converged on standard interfaces and formats. ONNX enables model interchange between frameworks. TensorRT optimizes for NVIDIA hardware. Core ML targets Apple silicon. These shared formats and well-supported toolchains reduce vendor lock-in and simplify deployment across platforms.

Neuromorphic computing lacks comparable standardization. Each vendor provides proprietary tools, formats, and interfaces. Models optimized for Loihi don’t directly deploy on NorthPole. Training procedures differ across platforms. Debugging approaches remain platform-specific.

This fragmentation increases development costs and deployment complexity. Organizations targeting multiple neuromorphic platforms must maintain separate codebases, expertise pools, and tool chains. The lack of portable models means vendor selection creates lock-in from development start rather than deployment end.

Industry consortia and standards bodies have begun addressing this gap, but standardization processes require years to mature and gain adoption. The Open Neuromorphic Research Community and similar organizations work toward common interfaces, but their efforts remain early-stage.

The Timeline to Maturity

Industry consensus projects 2-3 years for the neuromorphic software ecosystem to achieve mainstream readiness. This timeline assumes five developments: automated conversion tools that reach production quality and let developers port conventional models with minimal manual intervention; comprehensive frameworks that provide end-to-end workflows from training through deployment; standardized interfaces that allow model portability across platforms; mature debugging and profiling tools that support development productivity; and abundant educational resources and documentation that enable rapid skill development.

Some components advance faster than others. IBM’s NorthPole benefits from compatibility with conventional neural networks, requiring less dramatic tooling evolution. Intel’s Lava framework improves rapidly with each quarterly release. Academic research delivers algorithmic advances at an accelerating pace, with new results appearing weekly.

However, ecosystem maturity involves more than individual tool quality. It requires community formation in which developers share knowledge and best practices, documentation and training material that enable onboarding, vendor coordination around standards and interfaces, and a marketplace that provides commercial support options.

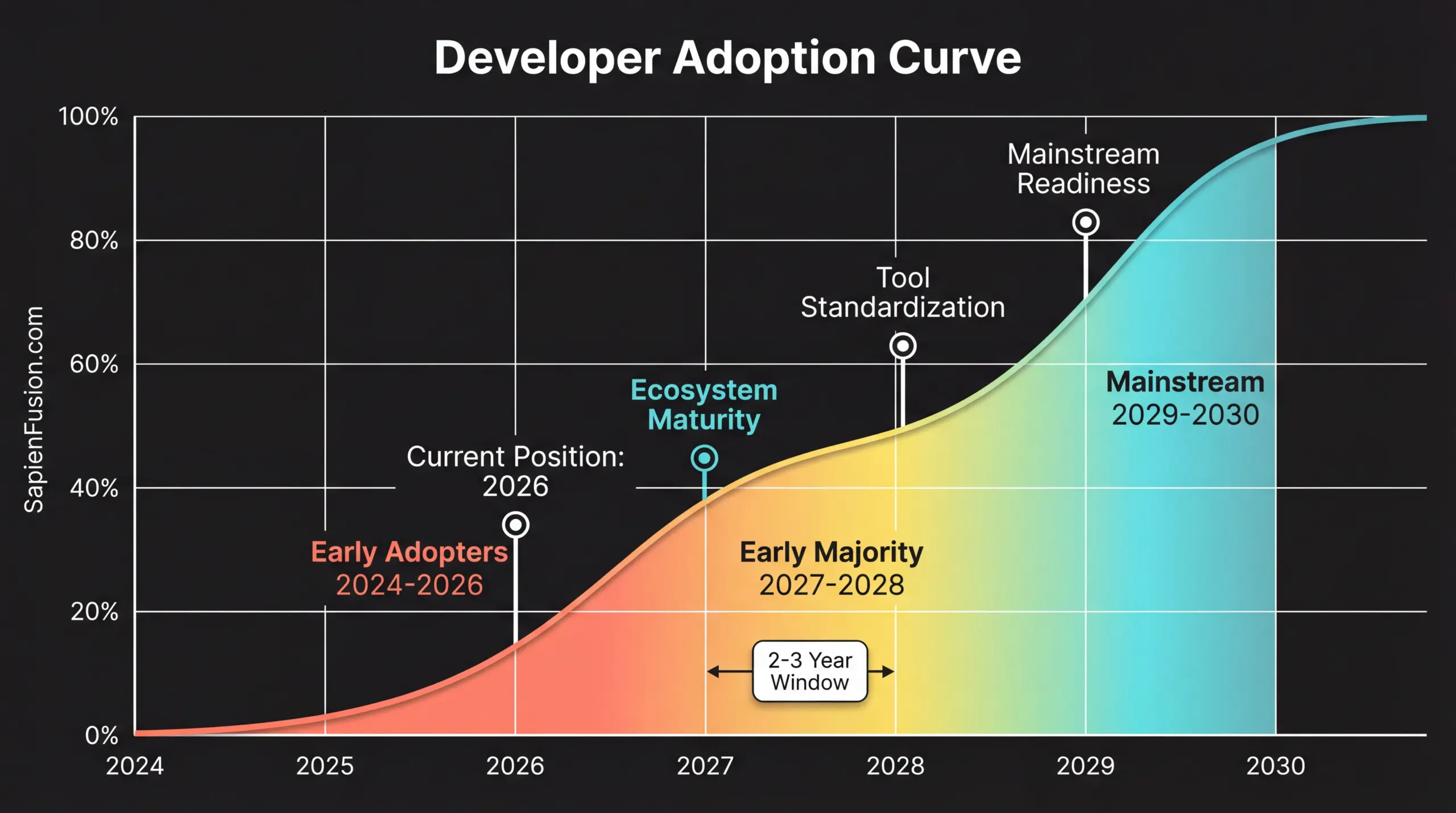

The 2-3 year timeline projects when mainstream developers can adopt neuromorphic computing without specialized expertise or acceptance of immature tooling. Organizations willing to invest in expertise development and navigate current limitations can deploy now, but face substantially higher barriers.

The Strategic Implications

The software maturity gap creates interesting strategic dynamics. Organizations adopting neuromorphic technology now accept higher development costs, longer timelines, and greater risk in exchange for competitive advantages. Those waiting for ecosystem maturity reduce development friction but concede market positioning to early movers.

The calculation depends on application characteristics and competitive dynamics. Applications where neuromorphic advantages prove decisive—battery-powered autonomy, sub-millisecond latency requirements, extreme edge deployment—justify navigating immature software. Applications where neuromorphic advantages offer incremental rather than transformative benefits reward waiting for tooling maturity.

The timeline matters significantly. Organizations beginning development now achieve production deployment in 2026-2027 as early ecosystem participants. Those waiting for mature tools begin development in 2027-2028 and deploy in 2029-2030. This 2-3 year difference determines whether organizations lead market transitions or follow established players.

The Investment Landscape

The software maturity gap attracts substantial investment. Venture funding flows toward companies building neuromorphic development tools, providing conversion services and consulting, and offering managed neuromorphic cloud infrastructure. Several well-funded startups target different aspects of the ecosystem challenge.

Major technology companies also invest. Intel maintains a substantial Lava development team that continuously expands the framework’s capabilities. IBM partners with enterprises and research institutions to advance NorthPole software. Cloud providers, including AWS, Azure, and Google Cloud, are evaluating neuromorphic instance offerings, though public availability remains limited.

This investment accelerates ecosystem development beyond what organic community formation alone would achieve. The capital enables hiring skilled engineers, supporting comprehensive documentation efforts, and providing free-tier access that encourages adoption.

The Productivity Paradox

An interesting paradox emerges from the hardware-software maturity gap. Neuromorphic processors offer extraordinary efficiency, enabling previously impossible applications, yet developing for them requires substantially more engineering effort than conventional approaches. The productivity equation only favors neuromorphic when application requirements absolutely demand the capabilities.

This paradox will resolve as tools mature. Early adopters accept a productivity penalty because application requirements leave no alternative—they need 72-hour battery life or sub-millisecond latency that GPUs cannot deliver. Mainstream adoption awaits tools reaching parity with conventional workflows.

The transition mirrors historical patterns. Early GPU computing required CUDA expertise and substantial programming effort. Widespread adoption came after high-level frameworks like PyTorch abstracted low-level details. Neuromorphic follows a similar path, with early adopters accepting complexity and later adopters benefiting from mature abstractions.

The Path Forward

The software challenge resolves through multiple parallel efforts. Tool developers build comprehensive frameworks that abstract neuromorphic complexity. Academic researchers discover optimal algorithms and architectures. Standards bodies establish interfaces enabling portability. Educational institutions train new practitioners. Industry partnerships create a commercial support infrastructure.

These efforts accelerate as hardware capabilities and application demand drive investment. The ANYmal success, Mercedes-Benz commitment, and IBM efficiency demonstrations validate hardware readiness. This validation attracts talent and capital to ecosystem development, creating a positive feedback loop that accelerates maturity.

Organizations evaluating neuromorphic adoption should consider whether their application demands justify accepting current software limitations. Those with transformative requirements should begin now building expertise and navigating immature tools. Those with incremental requirements should monitor ecosystem development and plan adoption aligned with maturity milestones.

The Reality

Neuromorphic hardware capabilities run ahead of software ecosystem maturity by approximately two to three years. This gap creates both a barrier and an opportunity. The barrier prevents easy adoption by mainstream developers. The opportunity rewards organizations willing to invest in building expertise and developing through current limitations.

The gap narrows quarterly as tools improve, documentation expands, and the community grows. The question for strategists is whether competitive dynamics demand moving before the gap closes or allow waiting until crossing becomes trivial.

For applications where neuromorphic advantages prove transformative rather than incremental, waiting means conceding market positioning to competitors navigating immature software now. For applications where advantages prove incremental, waiting reduces development costs and risks.