This is Part 3 of our 6-part Deep Dive series on neuromorphic computing—the brain-inspired processors achieving 1,000× efficiency improvements over GPUs at the edge.

[🧠] Sapien Fusion Deep Dive Series | February 4, 2026 | Reading time: 5 minutes

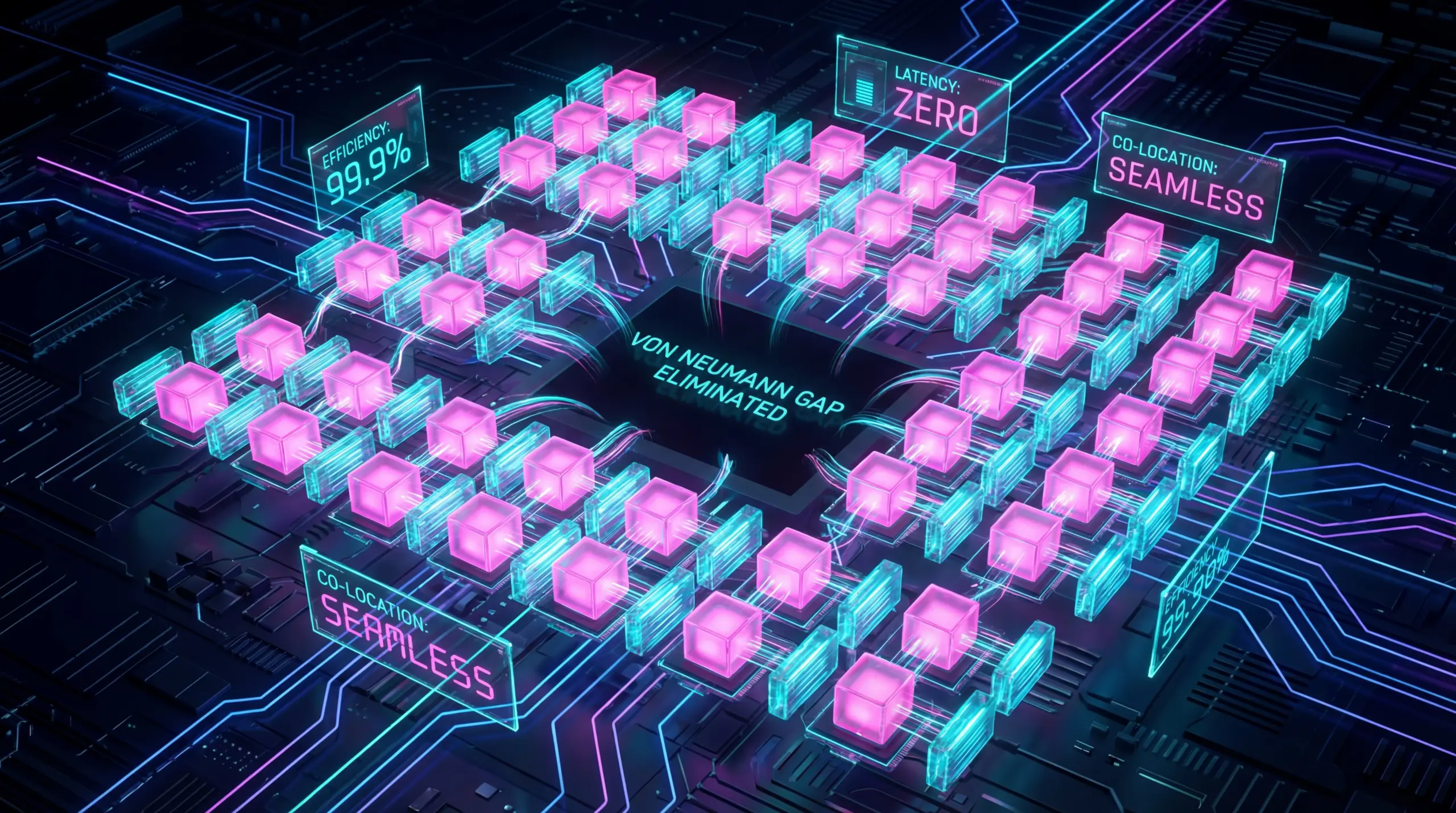

Solving the Von Neumann Bottleneck

While Intel pursued spike-based event processing, IBM Research attacked the memory bottleneck through radical architectural innovation—and achieved comparable efficiency gains through completely different mechanisms. NorthPole doesn’t process like a brain. Instead, it eliminates the fundamental inefficiency that cripples every conventional processor: the separation of memory and computation.

The Von Neumann Bottleneck Explained

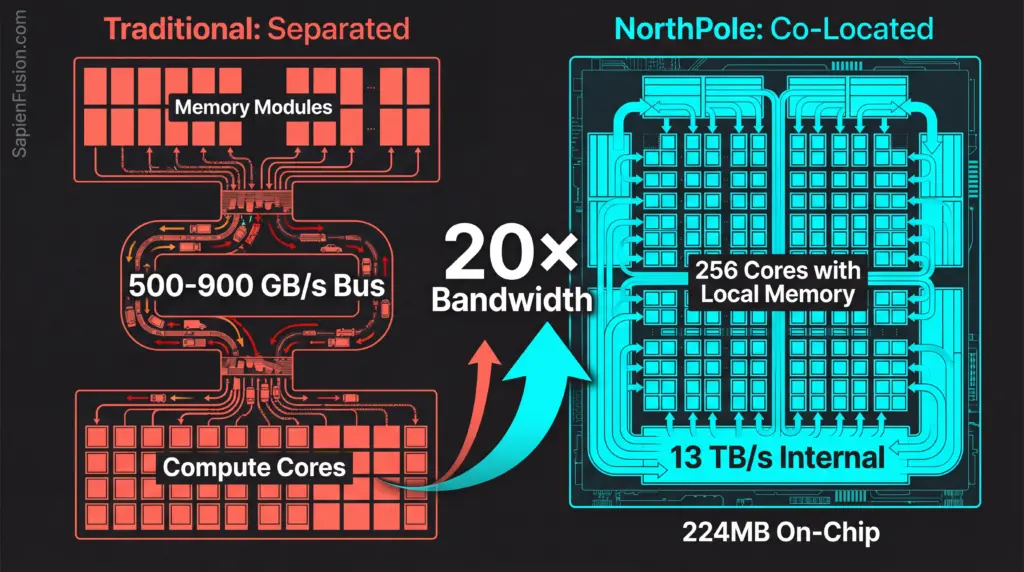

Every mainstream processor architecture—CPUs, GPUs, AI accelerators—inherits the von Neumann design where processing units live in one place, memory lives elsewhere, and a bus connects them. This architectural decision creates catastrophic inefficiency in modern AI workloads.

Neural network inference is typically memory-bound, not compute-bound. Processing a ResNet-50 vision model requires about 4 billion multiply-add operations, arithmetic that’s cheap and fast, alongside fetching 25.6 million parameters from memory, many of them repeatedly. The bottleneck isn’t performing the computations themselves but fetching the weights that feed them.
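To make the compute-versus-memory argument concrete, here is a minimal roofline-style sketch. The ResNet-50 figures come from the text above. The 8-bit weight size and the accelerator's peak throughput and external bandwidth are hypothetical placeholders, and the model ignores activation traffic and caching, so treat it as an illustration rather than a measurement.

```python
# Roofline-style sketch: attainable throughput is limited by whichever is
# lower, the compute roof or (memory bandwidth x arithmetic intensity).

RESNET50_MACS = 4_000_000_000    # multiply-adds per inference (from the text)
RESNET50_PARAMS = 25_600_000     # parameters (from the text)
BYTES_PER_WEIGHT = 1             # assumption: 8-bit weights


def arithmetic_intensity(macs: float, bytes_moved: float) -> float:
    """Multiply-adds performed per byte fetched from memory."""
    return macs / bytes_moved


def attainable_macs_per_s(peak: float, bandwidth: float, intensity: float) -> float:
    """Roofline bound: min(compute roof, memory roof)."""
    return min(peak, bandwidth * intensity)


def is_memory_bound(peak: float, bandwidth: float, intensity: float) -> bool:
    """True when the memory roof sits below the compute roof."""
    return bandwidth * intensity < peak


# Best case: every weight is fetched exactly once per inference.
intensity = arithmetic_intensity(RESNET50_MACS, RESNET50_PARAMS * BYTES_PER_WEIGHT)

HYPOTHETICAL_PEAK = 400e12       # MAC/s, placeholder accelerator
HYPOTHETICAL_BANDWIDTH = 2e12    # bytes/s, placeholder external memory

print(intensity)                                                              # 156.25
print(is_memory_bound(HYPOTHETICAL_PEAK, HYPOTHETICAL_BANDWIDTH, intensity))  # True
```

If weights have to be fetched more than once per inference, `bytes_moved` grows, the intensity falls, and the memory roof drops with it, which is the effect the paragraph above describes.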

The asymmetry problem compounds over time. Processor performance has historically doubled every 18-24 months, the pace popularly associated with Moore’s Law, while memory bandwidth improves at roughly half that rate. This growing gap means processors spend an increasing share of their time idle while waiting for data. In 2010, processors idled 30% of the time waiting for memory. By 2015, that figure reached 50%. By 2020, it was 60-70%, and by 2025 it had climbed to 70-80%. GPUs consume full power whether they are actively computing or idling while they wait for memory access, resulting in massive energy waste for mediocre throughput.

NorthPole’s Solution: Memory-Compute Co-location

IBM’s architecture eliminates external memory access by co-locating compute and memory on the same silicon die. The processor integrates 256 specialized processing cores with 224 MB of on-chip memory. Each core holds 768 KB (192 MB across the core array), and the remaining 32 MB sits outside the cores. Internal bandwidth reaches 13 terabytes per second, and the chip packs 22 billion transistors onto an 800 mm² die using a 12nm manufacturing process.
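The chip-level figures above can be cross-checked with a few lines of arithmetic. This sketch only uses the numbers quoted in the paragraph. The one assumption is that "terabytes per second" means decimal terabytes, while memory sizes use binary kilobytes and megabytes.

```python
KB = 1024
MB = 1024 * KB

CORES = 256
PER_CORE_BYTES = 768 * KB
TOTAL_ON_CHIP_BYTES = 224 * MB
INTERNAL_BANDWIDTH = 13e12       # bytes/s; decimal terabytes assumed
TRANSISTORS = 22e9
DIE_AREA_MM2 = 800

# Memory that lives inside the 256 cores, and what is left over.
core_array_mb = CORES * PER_CORE_BYTES // MB
outside_cores_mb = TOTAL_ON_CHIP_BYTES // MB - core_array_mb

# Even split of the internal bandwidth and the transistor budget.
bandwidth_per_core_gb_s = INTERNAL_BANDWIDTH / CORES / 1e9
transistors_per_mm2 = TRANSISTORS / DIE_AREA_MM2

print(core_array_mb)                        # 192
print(outside_cores_mb)                     # 32
print(round(bandwidth_per_core_gb_s, 1))    # 50.8
print(transistors_per_mm2)                  # 27500000.0
```

The per-core bandwidth is a simple average. It says nothing about how traffic is actually distributed across the on-chip interconnect.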

Each core contains processing elements capable of 2,048 operations per cycle alongside local memory storing model weights. Direct connections link neighboring cores without requiring external memory bus access. Entire neural network models reside on-chip, enabling inference to execute without data ever leaving the processor. Memory bandwidth is constrained only by on-chip interconnect speeds—roughly 20× faster than high-end GPU memory systems that must traverse external buses.
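Whether an entire model can reside on-chip depends on its size and weight precision. The sketch below estimates the minimum number of cards needed to hold a model's weights, using the 224 MB per-card figure from the text. The function name is hypothetical, and the estimate is a lower bound only: it ignores activations, any cached state, and the way layers are actually mapped to cores.

```python
ON_CHIP_BYTES_PER_CARD = 224 * 1024 * 1024   # 224 MB per card (from the text)


def min_cards_for_weights(num_params: int, bits_per_weight: int) -> int:
    """Lower bound on cards needed to keep all weights on-chip."""
    weight_bits = num_params * bits_per_weight
    capacity_bits = ON_CHIP_BYTES_PER_CARD * 8
    return -(-weight_bits // capacity_bits)   # integer ceiling division


# ResNet-50 at 8-bit precision fits on a single card.
print(min_cards_for_weights(25_600_000, 8))       # 1

# A 3-billion-parameter model at 4-bit precision needs several cards.
print(min_cards_for_weights(3_000_000_000, 4))    # 7
```

The weight-only bound of 7 cards is well under the 16-card system this article describes for a 3-billion-parameter model, which is consistent with real deployments needing room for more than weights.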

The Efficiency Breakthrough

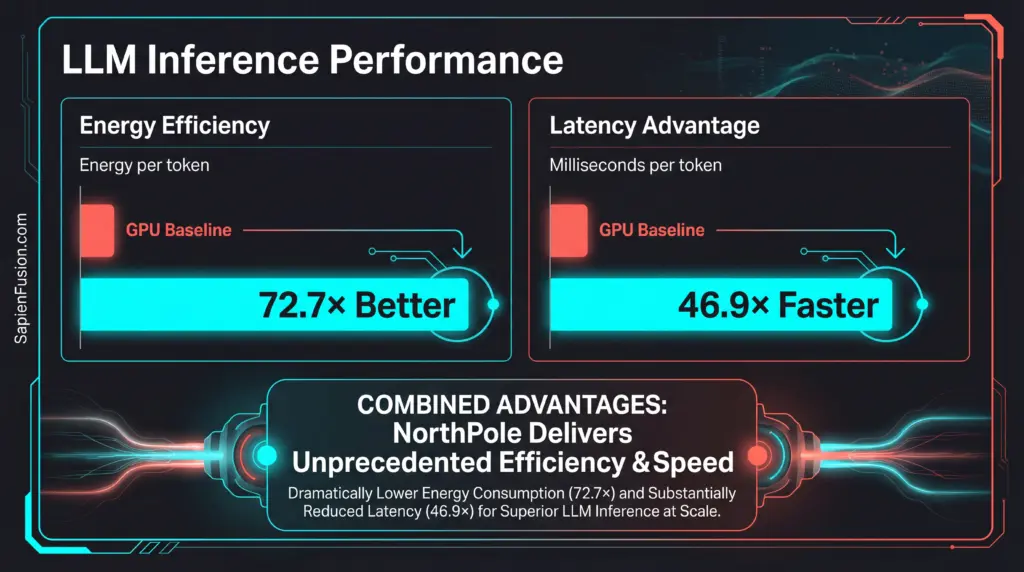

Recent IEEE Conference on High-Performance Computing results from 2024 demonstrated NorthPole’s capabilities extend beyond computer vision into language model territory—the workload where GPUs supposedly remain unassailable. Testing used a 3-billion-parameter model derived from IBM’s Granite codebase to validate performance across realistic workloads.

A single NorthPole card achieved sub-1-millisecond latency per token, delivering 46.9× lower latency than the most efficient GPU alternative while consuming 72.7× less energy. A 16-card system in standard 2U server form factor generated 28,356 tokens per second aggregate throughput while drawing just 672 watts total power. The deployment required no specialized cooling and operated within standard data center infrastructure constraints.
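The 16-card figures can be broken down into per-card and per-watt numbers. This is a back-of-the-envelope sketch that assumes the 672 W covers the whole system and that load is spread evenly across cards. Because it divides system power by aggregate throughput, the result is a system-level figure and need not match chip-level energy-per-token measurements.

```python
CARDS = 16
TOKENS_PER_SECOND = 28_356    # aggregate throughput (from the text)
SYSTEM_WATTS = 672            # total power draw (from the text)


def per_card(value: float, cards: int = CARDS) -> float:
    """Even split of a system-level quantity across cards."""
    return value / cards


def tokens_per_joule(tokens_per_s: float, watts: float) -> float:
    """Throughput per watt, which is the same as tokens per joule."""
    return tokens_per_s / watts


def millijoules_per_token(tokens_per_s: float, watts: float) -> float:
    """System energy spent per generated token, in millijoules."""
    return 1000 * watts / tokens_per_s


print(per_card(TOKENS_PER_SECOND))                                       # 1772.25
print(per_card(SYSTEM_WATTS))                                            # 42.0
print(round(tokens_per_joule(TOKENS_PER_SECOND, SYSTEM_WATTS), 1))       # 42.2
print(round(millijoules_per_token(TOKENS_PER_SECOND, SYSTEM_WATTS), 1))  # 23.7
```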

The economics shift dramatically at scale. NorthPole consumes 1-2 millijoules per token compared to 50-100 millijoules per token for latency-optimized GPUs. At enterprise scale, serving millions of requests daily, this efficiency differential translates to millions of dollars saved annually in electricity costs. Additional benefits include reduced cooling infrastructure requirements, increased inference throughput per rack unit, and lower carbon footprint per query.
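How much this is worth in electricity depends heavily on token volume and local prices, so it helps to run the numbers for a specific workload. The sketch below converts energy per token into annual kilowatt-hours and cost. The daily token volume and electricity price in the usage lines are hypothetical inputs, and the calculation covers compute energy only, not cooling, idle power, or hardware cost.

```python
JOULES_PER_KWH = 3_600_000
DAYS_PER_YEAR = 365


def annual_energy_kwh(tokens_per_day: float, millijoules_per_token: float) -> float:
    """Annual inference energy in kWh for a given per-token energy."""
    joules_per_day = tokens_per_day * millijoules_per_token / 1000
    return joules_per_day * DAYS_PER_YEAR / JOULES_PER_KWH


def annual_savings_usd(tokens_per_day: float,
                       baseline_mj_per_token: float,
                       candidate_mj_per_token: float,
                       usd_per_kwh: float) -> float:
    """Electricity cost difference between two per-token energy figures."""
    baseline = annual_energy_kwh(tokens_per_day, baseline_mj_per_token)
    candidate = annual_energy_kwh(tokens_per_day, candidate_mj_per_token)
    return (baseline - candidate) * usd_per_kwh


# Hypothetical workload: replace these with your own measurements.
tokens_per_day = 5e9          # assumption, not from the article
usd_per_kwh = 0.12            # assumption, not from the article

# Midpoints of the per-token ranges quoted above: 75 mJ (GPU) vs 1.5 mJ.
print(annual_savings_usd(tokens_per_day, 75, 1.5, usd_per_kwh))
```

Savings scale linearly with token volume, so the same function shows quickly at what scale the efficiency gap becomes financially significant for a given deployment.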

Scale-Out Performance

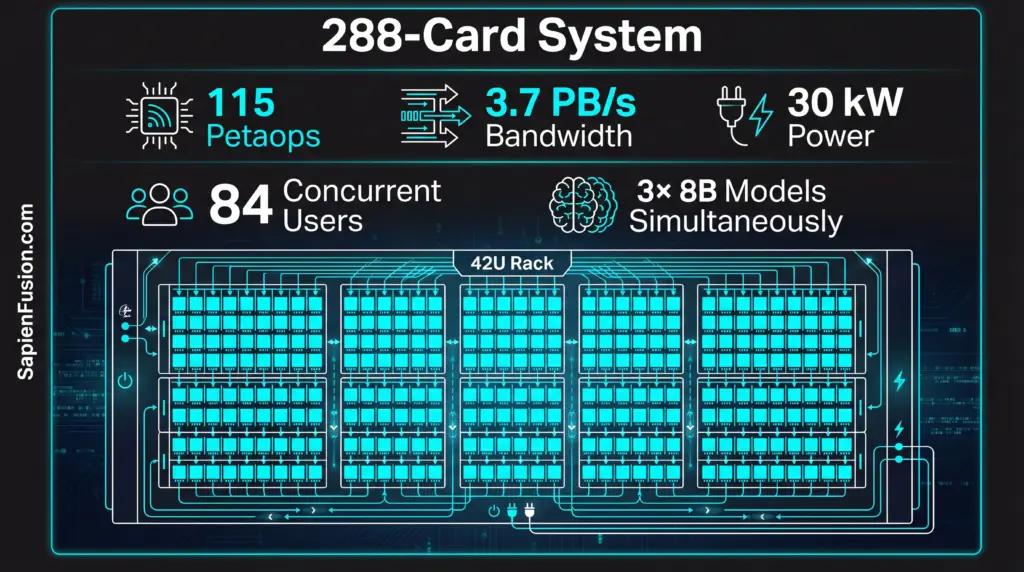

A complete 288-card NorthPole system delivers 115 petaops at 4-bit precision with 3.7 petabytes per second memory bandwidth while consuming 30 kilowatts and occupying 42 rack units. This configuration supports three simultaneous instances of 8-billion-parameter models serving 28 concurrent users per instance—84 total users—with 2.8ms per-user inter-token latency, all without requiring a custom interconnect fabric.
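The rack-level numbers can be sanity-checked against the single-chip figures quoted earlier. The sketch below divides the system totals evenly across cards and derives aggregate token throughput from the user count and inter-token latency. It assumes every user receives one token per latency interval, which is an idealization.

```python
CARDS = 288
PETAOPS_4BIT = 115
BANDWIDTH_PB_PER_S = 3.7
POWER_KW = 30
INSTANCES = 3
USERS_PER_INSTANCE = 28
INTER_TOKEN_LATENCY_S = 2.8e-3

teraops_per_card = PETAOPS_4BIT * 1000 / CARDS
bandwidth_tb_per_card = BANDWIDTH_PB_PER_S * 1000 / CARDS
watts_per_card = POWER_KW * 1000 / CARDS

total_users = INSTANCES * USERS_PER_INSTANCE
# Each user gets one token every INTER_TOKEN_LATENCY_S seconds.
aggregate_tokens_per_s = total_users / INTER_TOKEN_LATENCY_S

print(round(teraops_per_card))              # 399
print(round(bandwidth_tb_per_card, 2))      # 12.85
print(round(watts_per_card))                # 104
print(total_users)                          # 84
print(round(aggregate_tokens_per_s))        # 30000
```

The per-card bandwidth of roughly 12.85 TB/s lines up with the 13 TB/s on-chip figure, which suggests the quoted system bandwidth is the sum of on-chip bandwidth across cards rather than a measure of card-to-card traffic.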

The deployment simplicity advantage becomes clear when comparing infrastructure requirements. GPU clusters require high-bandwidth InfiniBand or proprietary interconnects, liquid cooling infrastructure, facility power upgrades measured in megawatts, and specialized integration expertise. NorthPole deploys in standard data centers using conventional Ethernet networking, air cooling, standard power distribution, and turnkey 42U rack solutions. This operational simplicity accelerates enterprise adoption significantly compared to GPU alternatives requiring facility modifications.

Architecture Philosophy

NorthPole represents a fundamentally different design philosophy from Loihi 3. Intel’s approach uses bio-inspired spiking neurons with event-driven temporal dynamics; it is optimized for sparse, changing data and delivers maximum efficiency on neuromorphic workloads. IBM’s approach keeps conventional neural network operations built on dense matrix multiplication and optimizes them through memory-compute co-location, which eliminates the bottleneck while maintaining broader workload compatibility.

Both architectures achieve massive efficiency improvements through architectural innovation. Neither is objectively “better”—they target different deployment scenarios with different optimization priorities and workload characteristics.

Production Readiness

As of January 2026, NorthPole operates under enterprise-focused controlled release with partnership programs engaging select organizations. Production allocation serves strategic customers while broader commercial availability targets mid-2026. Available form factors include single-card PCIe modules, multi-card servers in 2U and 4U configurations, complete 42U rack systems, and custom configurations for large deployments. Target markets span LLM inference serving, computer vision at scale, real-time video processing, and edge AI applications with moderate power budgets.

Competitive Positioning

NorthPole competes differently from Loihi 3 across multiple dimensions. Against GPU inference, NorthPole delivers a 72.7× efficiency advantage and a 46.9× latency advantage while maintaining comparable or better throughput with simpler deployment requirements. Compared to Loihi 3, NorthPole performs better on dense model inference, supports a broader range of models, and enables easier software migration for conventional networks, though its efficiency gains are less extreme.

Market segmentation emerges clearly across use cases. Loihi 3 serves battery-powered edge deployments with extreme efficiency requirements. NorthPole targets data center inference with moderate power constraints. GPUs remain competitive for training workloads and bulk inference where efficiency matters less than raw throughput.

The Software Advantage

NorthPole’s architectural decision to support conventional neural networks creates a significant software ecosystem advantage. Models trained in PyTorch or TensorFlow deploy on NorthPole with minimal modification. Standard quantization techniques apply directly, conventional optimization strategies work without adaptation, familiar debugging and profiling tools function normally, and developers require no specialized training to begin productive work.
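As an illustration of what a "standard quantization technique" looks like, here is a minimal sketch of symmetric 4-bit weight quantization in plain Python. It is a generic textbook scheme, not NorthPole's toolchain or any framework's API, and the function names are hypothetical.

```python
from typing import List, Tuple


def quantize_symmetric(weights: List[float], bits: int = 4) -> Tuple[List[int], float]:
    """Map floats to signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (bits - 1) - 1                    # 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Python's round() rounds exact halves to the nearest even integer.
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


weights = [1.75, -0.5, 0.25, 0.0]
codes, scale = quantize_symmetric(weights, bits=4)

print(codes)                       # [7, -2, 1, 0]
print(scale)                       # 0.25
print(dequantize(codes, scale))    # [1.75, -0.5, 0.25, 0.0]
```

The example weights were chosen so they land exactly on the 4-bit grid. Real weights generally do not, and the gap between the original and dequantized values is the quantization error that calibration or quantization-aware training tries to minimize.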

The contrast with Loihi 3 highlights the accessibility gap. Loihi 3 requires conversion to spiking neural networks through complex transformation processes. Developers must learn temporal dynamics concepts with steep learning curves. The tooling ecosystem remains immature with limited community resources. Organizations need specialized expertise from a scarce talent pool. This accessibility difference accelerates NorthPole adoption significantly compared to more exotic neuromorphic approaches requiring extensive developer retraining.

Real-World Deployments

Early NorthPole deployments demonstrate practical advantages across diverse applications. A major financial institution replaced an 8-GPU cluster with a 16-card NorthPole system, maintaining identical throughput while reducing power consumption by 68% and eliminating liquid cooling requirements. Annual savings exceeded $180,000 in electricity and cooling costs alone.

A manufacturing quality inspection system processing 500 frames per second transitioned from a GPU solution consuming 850W with 12ms latency to a NorthPole deployment consuming 120W with 2.5ms latency. The roughly 86% power reduction enabled deployment in space-constrained facilities previously unable to accommodate GPU thermal requirements.
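The inspection-system figures translate into a few derived quantities worth checking. This sketch uses only the numbers quoted above and assumes both systems sustain the full 500 frames per second. The frames-in-flight estimate applies Little's law (items in flight equal throughput times latency).

```python
FRAMES_PER_SECOND = 500
GPU = {"watts": 850, "latency_s": 0.012}
NORTHPOLE = {"watts": 120, "latency_s": 0.0025}


def percent_reduction(before: float, after: float) -> float:
    """Relative drop from 'before' to 'after', in percent."""
    return 100 * (before - after) / before


def joules_per_frame(watts: float, fps: float) -> float:
    """Energy spent on each frame at a sustained frame rate."""
    return watts / fps


def frames_in_flight(fps: float, latency_s: float) -> float:
    """Little's law: average number of frames being processed at once."""
    return fps * latency_s


print(round(percent_reduction(GPU["watts"], NORTHPOLE["watts"]), 1))   # 85.9
print(round(GPU["latency_s"] / NORTHPOLE["latency_s"], 1))             # 4.8
print(round(joules_per_frame(GPU["watts"], FRAMES_PER_SECOND), 2))     # 1.7
print(round(joules_per_frame(NORTHPOLE["watts"], FRAMES_PER_SECOND), 2))  # 0.24
print(round(frames_in_flight(FRAMES_PER_SECOND, GPU["latency_s"]), 2))    # 6.0
print(round(frames_in_flight(FRAMES_PER_SECOND, NORTHPOLE["latency_s"]), 2))  # 1.25
```

At 12ms latency the GPU pipeline has to keep about six frames in flight to sustain 500 frames per second, while the 2.5ms figure needs only slightly more than one.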

Autonomous drone swarm coordination, which depends on low-latency decision making, previously required tethered operation due to GPU power constraints. NorthPole enabled fully autonomous multi-hour missions, with power efficiency gains that eliminated tethering and increased operational capability by more than 400%.

Strategic Implications

Organizations deploying AI inference at scale face a decision point with significantly different strategic implications. The GPU-centric approach continues established tooling and expertise while accepting power, cooling, and cost constraints. This path requires facility infrastructure investments and maintains familiar operational patterns without forcing organizational change.

NorthPole adoption achieves 50-70× efficiency improvements while dramatically reducing infrastructure requirements. Organizations can deploy in space- and power-constrained locations previously unsuitable for AI inference and establish cost advantages over competitors that maintain GPU-only strategies.

The competitive timeline creates first-mover advantages spanning 2-3 years. Companies beginning NorthPole evaluation in 2026 complete pilot deployments and performance validation, execute production integration and operational optimization in 2027, and achieve scale deployment with cost advantage realization in 2028. Companies maintaining GPU-only strategies watch early adopters establish efficiency advantages through 2026-2027, react to competitive pressure by beginning evaluation in 2028, and deploy NorthPole in 2029 after competitors have already optimized their operations and captured market positioning benefits.

The Memory-Compute Revolution

NorthPole proves the von Neumann bottleneck isn’t a fundamental law—it’s an architectural choice that can be eliminated through co-location. The efficiency gains aren’t incremental optimization. They represent a paradigm shift enabling enterprise AI deployment at previously impossible scales, edge AI in moderate power budgets without requiring extreme efficiency like Loihi 3, competitive cost structures for AI-as-a-service providers, and data center AI without megawatt power requirements.

For organizations running substantial AI inference workloads, NorthPole represents an inflection point. The choice is clear: maintain the GPU status quo and accept an efficiency penalty, or adopt a neuromorphic architecture and establish cost and capability advantages. The strategic window narrows as early adopters optimize operations and establish competitive positioning that becomes increasingly difficult for followers to overcome.